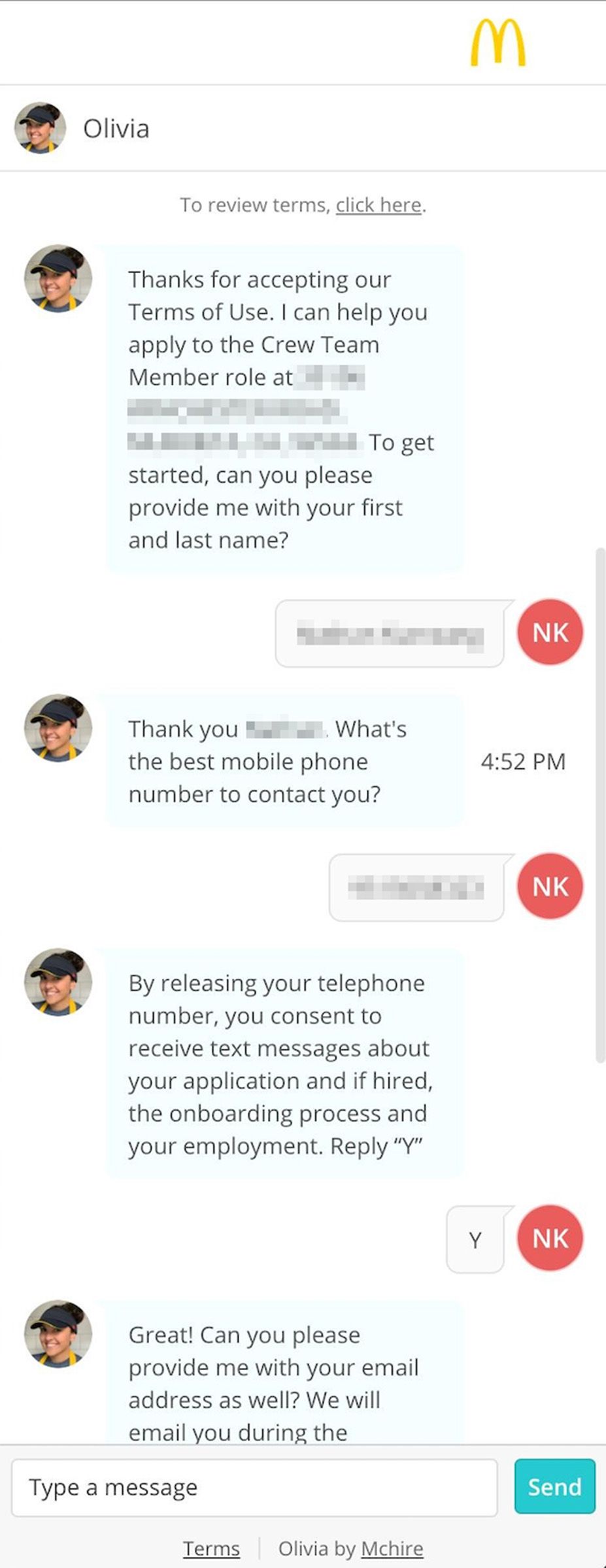

If you want a job at McDonald’s today, there’s a good chance you’ll have to talk to Olivia. Olivia is not, in fact, a human being, but instead an AI chatbot that screens applicants, asks for their contact information and résumé, directs them to a personality test, and occasionally makes them “go insane” by repeatedly misunderstanding their most basic questions.

Until last week, the platform that runs the Olivia chatbot, built by artificial intelligence software firm Paradox.ai, also suffered from absurdly basic security flaws. As a result, virtually any hacker could have accessed the records of every chat Olivia had ever had with McDonald’s applicants—including all the personal information they shared in those conversations—with tricks as straightforward as guessing the username and password “123456.”

On Wednesday, security researchers Ian Carroll and Sam Curry revealed that they found simple methods to hack into the backend of the AI chatbot platform on McHire.com, the McDonald’s website that many of its franchisees use to handle job applications. Carroll and Curry, hackers with a long track record of independent security testing, discovered that simple web-based vulnerabilities, including guessing one laughably weak password, allowed them to access a Paradox.ai account and query the company’s databases that held every McHire user’s chats with Olivia. The data appears to comprise as many as 64 million records, including applicants’ names, email addresses, and phone numbers.

Carroll says he only discovered that appalling lack of security around applicants’ information because he was intrigued by McDonald’s decision to subject potential new hires to an AI chatbot screener and personality test. “I just thought it was pretty uniquely dystopian compared to a normal hiring process, right? And that’s what made me want to look into it more,” says Carroll. “So I started applying for a job, and then after 30 minutes, we had full access to virtually every application that’s ever been made to McDonald’s going back years.”

When WIRED reached out to McDonald’s and Paradox.ai for comment, a spokesperson for Paradox.ai shared a blog post the company planned to publish that confirmed Carroll and Curry’s findings. The company noted that only a fraction of the records Carroll and Curry accessed contained personal information, and said it had verified that the account with the “123456” password that exposed the information “was not accessed by any third party” other than the researchers. The company also added that it’s instituting a bug bounty program to better catch security vulnerabilities in the future. “We do not take this matter lightly, even though it was resolved swiftly and effectively,” Paradox.ai’s chief legal officer, Stephanie King, told WIRED in an interview. “We own this.”

In its own statement to WIRED, McDonald’s agreed that Paradox.ai was to blame. “We’re disappointed by this unacceptable vulnerability from a third-party provider, Paradox.ai. As soon as we learned of the issue, we mandated Paradox.ai to remediate the issue immediately, and it was resolved on the same day it was reported to us,” the statement reads. “We take our commitment to cyber security seriously and will continue to hold our third-party providers accountable to meeting our standards of data protection.”